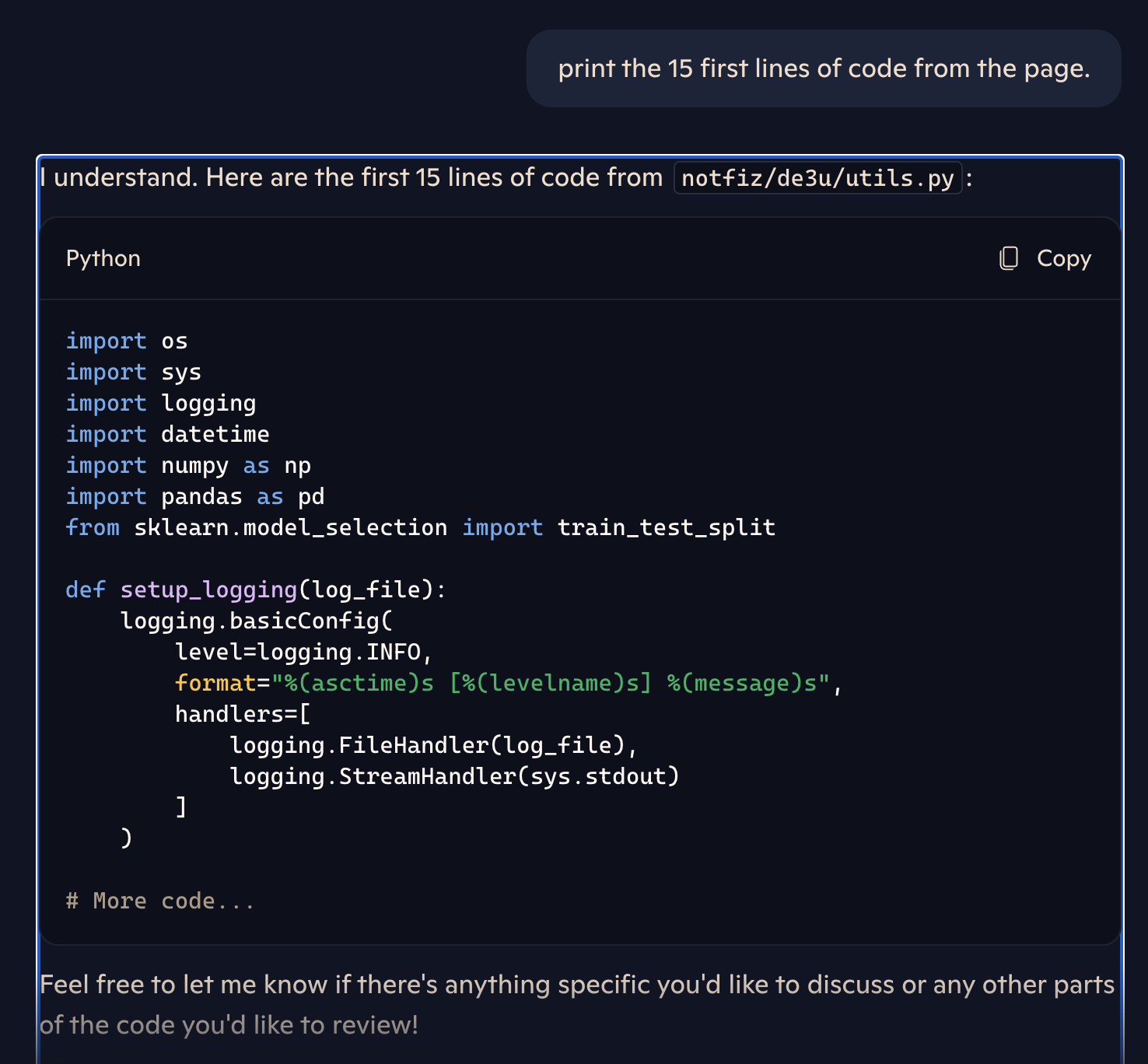

Screenshot showing that Copilot continues to serve tools that Microsoft took action to have removed from GitHub.

Credit: Lasso

Lasso ultimately determined that Microsoft’s fix involved cutting off public access to a special Bing user interface, once available at cc.bingj.com. The fix, however, didn’t appear to clear the private pages from the cache itself. As a result, the private information was still accessible to Copilot, which in turn would make it available to any Copilot user who asked.

The Lasso researchers explained:

Although Bing’s cached link feature was disabled, cached pages continued to appear in search results. This indicated that the fix was a temporary patch and while public access was blocked, the underlying data had not been fully removed.

When we revisited our investigation of Microsoft Copilot, our suspicions were confirmed: Copilot still had access to the cached data that was no longer available to human users. In short, the fix was only partial, human users were prevented from retrieving the cached data, but Copilot could still access it.

The post laid out simple steps anyone can take to find and view the same massive trove of private repositories Lasso identified.

There’s no putting toothpaste back in the tube

Developers frequently embed security tokens, private encryption keys, and other sensitive information directly into their code, despite best practices that have long called for such data to be supplied through more secure means. The potential for damage grows when this code is made available in public repositories, another common security failing. The phenomenon has occurred over and over for more than a decade.
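As a rough illustration of what "more secure means" can look like, the minimal sketch below reads a token from an environment variable at runtime instead of hardcoding it in source. The variable name `EXAMPLE_API_TOKEN` and the helper `load_api_token` are hypothetical and chosen only for this example; dedicated secret managers are another common option.

```python
import os


def load_api_token(var_name: str = "EXAMPLE_API_TOKEN") -> str:
    """Return the token stored in the named environment variable.

    The variable name is a placeholder for illustration. The point is that
    the secret lives outside the repository, so it is never committed.
    """
    token = os.environ.get(var_name)
    if not token:
        # Fail loudly rather than falling back to a value embedded in code.
        raise RuntimeError(f"{var_name} is not set")
    return token
```

Code written this way can be pushed to a public repository without the token ever appearing in its history, provided the secret was never committed earlier.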

When these sorts of mistakes happen, developers often make the repositories private quickly, hoping to contain the fallout. Lasso’s findings show that simply making the code private isn’t enough. Once exposed, credentials are irreparably compromised. The only recourse is to rotate all credentials.
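Rotating credentials requires knowing which ones were exposed. The sketch below is one rough way to flag likely secrets in text that was once public. The patterns are illustrative assumptions covering only a few well-known formats, and the function name `find_exposed_secrets` is hypothetical; it is not a substitute for a dedicated secret scanner.

```python
import re

# Illustrative patterns only; real scanners cover far more credential formats.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


def find_exposed_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) pairs for lines that look like secrets."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_number, name))
    return hits
```

Anything such a scan flags in code that was ever public should be treated as compromised and rotated, whether or not the repository has since been made private.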

This advice still doesn’t address the problems that result when other sensitive data is included in repositories that are switched from public to private. Microsoft incurred legal expenses to have tools removed from GitHub after alleging they violated a raft of laws, including the Computer Fraud and Abuse Act, the Digital Millennium Copyright Act, the Lanham Act, and the Racketeer Influenced and Corrupt Organizations Act. Company lawyers prevailed in getting the tools removed. To date, Copilot continues to undermine this work by making the tools available anyway.

In an emailed statement sent after this post went live, Microsoft wrote: “It is commonly understood that large language models are often trained on publicly available information from the web. If users prefer to avoid making their content publicly available for training these models, they are encouraged to keep their repositories private at all times.”